Recently, I ran into a small but irritating coding problem. It went something like this.

I was making a graph with a long text label that needed to be spaced (or “wrapped”) across two lines.

Code:

graph +

geom_label(aes(label = str_wrap(label, width = 30)),

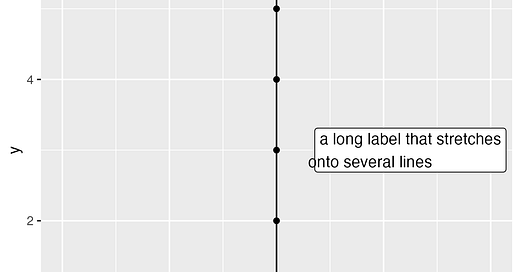

hjust = -0.2)Output:

See the problem? The second line of text is misaligned with the first.

Unsure why this was happening, I sought a solution in two ways.

I made a post on StackOverflow, a popular question-and-answer forum.

I submitted the same post to Claude, Anthropic’s large language model.

Which one do you think was more helpful?

Claude, naturally, was quickest. But the solution Claude first proposed simply didn’t work. Neither did the second or third, although the (nicely formatted, annotated) code became increasingly complex with each new prompt. Here is the output from one of Claude’s proposals, suggesting that a monospaced font would fix the misalignment.

Not long after that, I got a response on StackOverflow from “Axeman,” a Swedish biologist and prolific contributor to the StackOverflow forum.

He explained that my error was in using the text justification argument (`hjust = -0.2`) to shift the label to the right. This works on single-line bits of text, but it’s a hacky solution inconsistent with the intended use of the function argument. Instead, Axeman explained that I should use the `nudge_x` argument, like so:

    graph +
      geom_label(aes(label = str_wrap(label, width = 30)),
                 nudge_x = 0.1, hjust = 0)

This is exactly the output I was looking for.
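For anyone who wants to try the comparison themselves, here is a minimal sketch. The data frame, the label text, and the axis limits are made up for illustration (they are assumptions, not my actual chart), so treat it as an approximation of the setup rather than the exact code.

```r
library(ggplot2)
library(stringr)

# Hypothetical data: a single point with a label long enough to wrap
df <- data.frame(
  x = 1,
  y = 1,
  label = "A fairly long text label that has to wrap across two lines"
)

# Stand-in for the `graph` object; the x limits leave room for the label
graph <- ggplot(df, aes(x, y)) +
  geom_point() +
  xlim(0.5, 3)

# Original attempt: shift the label by abusing the justification argument
p_hjust <- graph +
  geom_label(aes(label = str_wrap(label, width = 30)),
             hjust = -0.2)

# Suggested fix: left-justify the text, then shift the whole label
p_nudge <- graph +
  geom_label(aes(label = str_wrap(label, width = 30)),
             nudge_x = 0.1, hjust = 0)
```

Printing `p_hjust` and `p_nudge` one after the other should make the difference in line alignment visible.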

The StackOverflow user Axeman understood that I was taking the wrong approach by using the wrong function arguments. He understood my real question and answered accordingly.

Claude, the LLM, became stuck in the same rut I was already in—using the incorrect `hjust` argument in its answers instead of switching to the more appropriate `nudge_x` parameter.

This is a trivial example, but I think it illustrates a bigger problem with the current LLMs. They can’t tell you when you’re asking the wrong question.

Every LLM I’ve used begins its responses by rephrasing my question. Their relentlessly cheerful tone stands in stark contrast to StackOverflow, whose human users are notoriously prone to rudeness, especially with novice users who imprecisely frame their questions.

Yet the LLMs’ ability to rephrase a question isn’t actually a sign that they understand your intention; it’s just the stochastic parrot repeating your question back again.

In hindsight, the best solution to my little coding problem probably wasn’t an LLM or a user forum, but an even older technology. I should’ve just read the software documentation.

We could talk about this for multiple hours over drinks, but in short, you have touched on a lot of what we have been discussing at home. Re: just reading the documentation, sure...until documentation gets incredibly clouded by LLMs. Already documentation for many businesses/orgs is poorly maintained and organized, if it exists (although open source is probably significantly better). Ben's experience in that realm is that many businesses are being sold AI as a solution when the real solution is "hire people to maintain, write, and train documentation", aka, librarians. As for the "real question," yes. I was thinking about this while manually reading crash stats and reports, and from there ended up with a way more interesting question and concern re: what crashes we do/don't "count" towards Vision Zero goals. If I had been using just the raw stats that get sent over to dashboards or had the AI tell me something, I would just...not have thought of some of the issues I analyzed. Human eyes are good! Critical thinking is good!